We met with Louis Laszlo, VP of Product Management at Atempo, to talk about Atempo's news in the HPC world.

Lots of HPC centers run very few backup and archiving jobs. Is this changing?

This used to be the case for high-performance computing centers. Today, however, backup and archiving are gaining traction in this sector because the HPC environment has changed fundamentally in recent years. Let me start by explaining the reasons behind these changes.

First, technology and users have matured: HPC centers are not just about computing anymore. Growing numbers of researchers need to run Artificial Intelligence (AI) and Machine Learning (ML) workloads on HPC clusters, or combine them with traditional computing jobs. The HPC cluster now has new, hybrid uses and needs: for example, specialized nodes dedicated to data management, or to image processing with Data Processing Units (DPUs) and Graphics Processing Units (GPUs).

ML implies reusing data sets and enriching them. More and more scientific and industrial projects have existing data to analyze and correlate. This hybridization of HPC clusters also affects storage: there is more data movement between the HPC storage tiers, from home to scratch and back, along with additional archiving requirements. And this is not happening behind closed doors. Scientists clearly see the need for more collaboration, more shared data, and archives shared both internally and externally.

Is energy consumption a growing concern in today’s HPC environments?

Yes, absolutely. HPC centers are extremely energy-intensive: their high density of compute nodes produces considerable heat and relies on sophisticated cooling systems. With the cost of energy now a real issue in Europe, and with climate change considerations to manage, energy consumption has become an essential HPC center metric. Customers and partners report that HPC centers are already evolving, without waiting for future energy transitions. To reduce their energy consumption, they limit unused resources, consolidate servers and storage, and take a more selective approach to computing, favoring more frequent archiving of computing results.

How does Miria support evolving HPC centers?

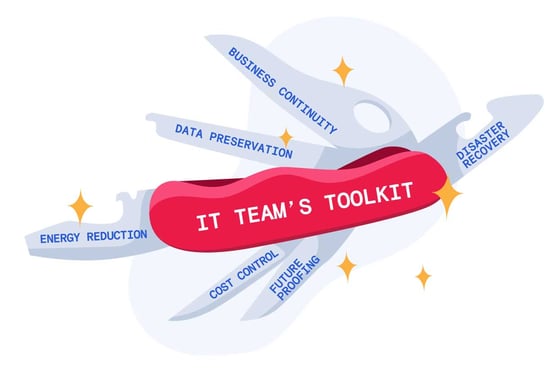

Miria can help in multiple ways. The first is archiving. Archiving older data shrinks the massive volumes held on the home and work storage tiers. This reduces both the total volume of data stored and the volume of data to back up. Put simply, when you have less data to store, you reduce storage costs and simplify the IT team's data protection and storage tasks.

Contrary to popular belief, archiving does not need to be complex and archaic. Modern archiving is web-based and fully accessible to non-IT users. Many HPC research scientists happily use our web interface to drag and drop data into an archive and enrich it with metadata. Meanwhile, in the background, Miria applies the rules predefined by the IT team, for instance automatically creating multiple copies on different targets or preventing data modifications. HPC users in several sectors, such as autonomous vehicles, finance, and nuclear energy, must preserve all their data and projects for decades. They find Miria's archiving support for object lock on cloud or object storage a very cost-effective solution for their needs.

Backup is another standout Miria feature. Miria protects data sets of a petabyte and beyond! Our software integrates deeply with most parallel file systems and HPC storage platforms, such as GPFS, Lustre, Qumulo, Isilon, and Vast, to name but a few. This smart integration, aptly named "FastScan", lets our incremental backup quickly identify new and changed data without a lengthy full crawl of the file system, so backups complete faster. We can also consolidate incremental backups on object or cloud storage. Together with FastScan, this drastically reduces the volume of data transferred and stored for disaster recovery. Miria only performs incremental backups, in forever incremental mode, and rebuilds a full backup on demand whenever you need one. Read our blog post about the University of Lausanne to learn more.
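To make the forever-incremental idea concrete, here is a minimal, generic sketch of how a full backup image can be rebuilt on demand from a chain of incremental runs. It is not Miria's implementation or API. The `Incremental` record and the `synthesize_full` function are hypothetical names chosen for illustration. The sketch assumes each run records the files added or changed since the previous run, as a path-to-version mapping, plus the paths deleted since that run, and that the first run in the chain is the initial full backup.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Incremental:
    """Hypothetical record of one backup run (not a Miria data structure)."""
    changed: Dict[str, str] = field(default_factory=dict)  # path -> version id
    deleted: Set[str] = field(default_factory=set)         # paths removed since the previous run


def synthesize_full(chain: List[Incremental], upto: int) -> Dict[str, str]:
    """Rebuild a full image as of run `upto` (0-based) by replaying runs in order.

    Only changed files are ever transferred; the 'full' backup is computed
    from the chain on demand rather than being re-copied from the source.
    """
    image: Dict[str, str] = {}
    for run in chain[: upto + 1]:
        # Apply deletions first, so a path deleted and re-created in the
        # same run ends up present with its new version.
        for path in run.deleted:
            image.pop(path, None)
        image.update(run.changed)
    return image


# Illustrative chain: run 0 is the initial full backup, later runs are incrementals.
chain = [
    Incremental(changed={"/home/a.dat": "v1", "/home/b.dat": "v1"}),
    Incremental(changed={"/home/a.dat": "v2"}),
    Incremental(changed={"/scratch/c.dat": "v1"}, deleted={"/home/b.dat"}),
]

print(synthesize_full(chain, 2))  # state after the third run
```

In this sketch, the third run transfers only `/scratch/c.dat` and a deletion marker. A full image for any point in the chain is still available by replay, which is the trade-off forever-incremental schemes make: less data moved per run, in exchange for reconstruction work at restore time.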

A final important point about backup: with current levels of ransomware attacks, HPC centers need a backup system that not only scales but also offers proper air-gap protection at scale, via cloud, tape, or even WORM tape media.

Next, let's talk about Miria's Migration service. HPC data centers frequently need to upgrade their current storage, or to replace it entirely with a more energy-efficient solution. At petabyte scale, these projects are never entirely straightforward. Miria's migration feature has proved highly efficient and reliable in delivering storage upgrade projects on time. Read our blog post titled "Replacing your file storage" for more information.

Lastly, an honorable mention for Miria's two remaining services, Mobility and Analytics, which round out this all-in-one platform:

- Our Mobility service gives scientists and research users a solid, reliable, and secure way to move data efficiently between storage tiers. The positive takeaway? No more delays to your compute schedule while you wait for an unfinished data transfer, and no more repeated transfer attempts. It also frees the IT team from having to handle these tasks. I'm happy to share this piece of good news. Read our blog post titled "Is achieving self-service HPC data mobility in small IT teams a reality?" for more information.

- Our Analytics module has attracted a lot of interest from the IT staff in charge of managing HPC environments. It consolidates an overview of all your file storage systems, making it simple to identify cold data and other opportunities for storage consolidation.

In a nutshell, hybrid HPC centers need more data management than the original, pure-compute HPC centers did. They use, produce, move, and reuse more data than ever before. They must do all this while reducing their energy footprint and keeping their data and users safe. Sounds like a perfect mission for Miria!